As a consultant and Data Engineer I’ve lost count of the number of times I’ve been asked “how do we do DevOps for Databricks?”, and the simple answer is, it depends. I know this sounds like a typical consultant’s response, but please bear with me.

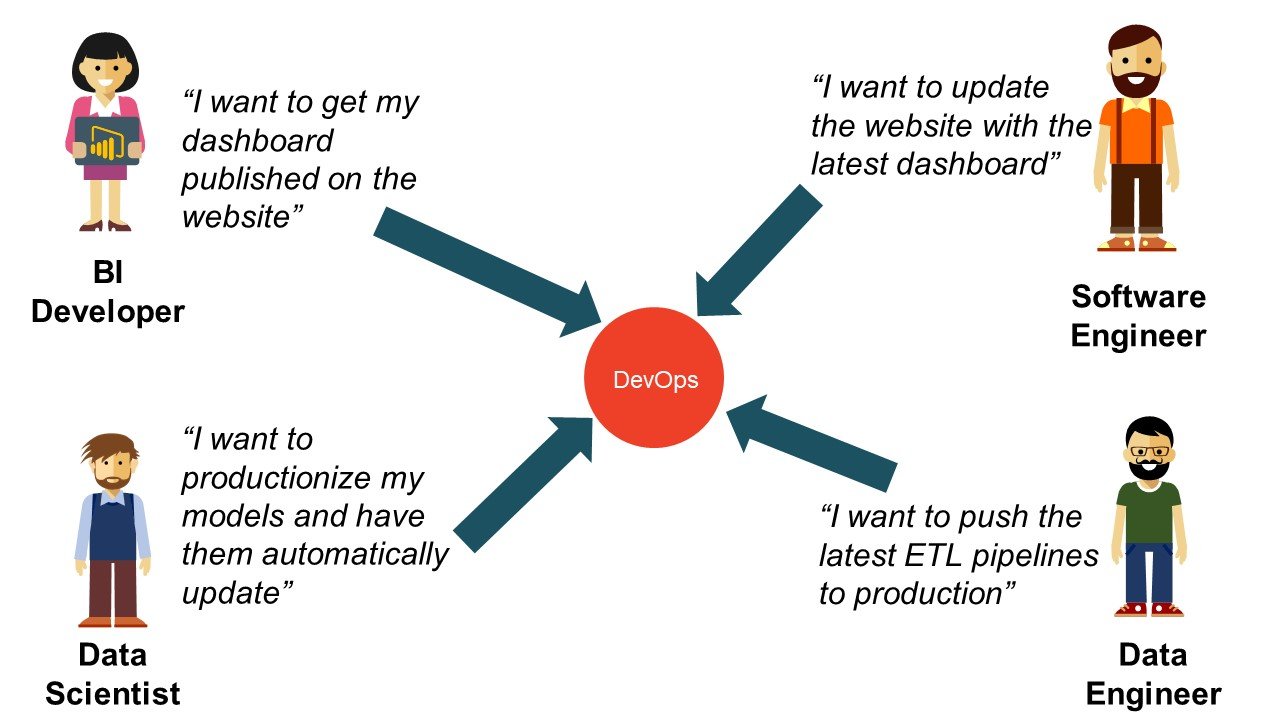

It’s important to first observe that everyone is looking for that one Holy Grail solution to address all Databricks DevOps requirements. However, it’s not always the same professionals asking that crucial question. Those asking vary from Data Scientists to Data/Software Engineers to Infrastructure/DevOps Specialists. So now ask yourself, will a solution that works for one always work for the other? The answer to that is categorically no.

What do we want to achieve?

So, you’ve got a variety of Data Professionals wanting to integrate and productionize their work. These professionals want to get their work out there, but at the same time feel in control and understand how this is done. Ideally, they want to be able to do this themselves, without having to ask the infrastructure/DevOps person(s) to do this for them.

That said, when integrating and productionizing there needs to be a level of testing, essentially gating, that checks what is being integrated/productionized. It must be deemed worthy before being let loose! So, the Data Professionals should feel competent in this process, and secure in the knowledge that it will catch issues/bugs.

The below diagram illustrates the DevOps dream of continuous integration and deployment. It is a well-known diagram that anyone working with CI/CD (Continuous Integration/Continuous Deployment) or IaC (Infrastructure as Code) is familiar with (or should be). This is our dream, and in this blog series we will explore the ways we can achieve this in Databricks, using different tooling.

What are the options?

OK, so we know what we want to achieve; the question now is how. What tooling is there to make this easier/achievable? In this blog series we will look at some of the most popular options, including:

Implementation

· Databricks REST API (& Python)

· Terraform

· Pulumi

DevOps Platforms

· Azure DevOps

· GitHub Actions, GitLab
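To give a flavour of the first implementation option, here is a minimal sketch of calling the Databricks REST API from Python. It uses the Clusters API list endpoint (`/api/2.0/clusters/list`) with a personal access token sent as a Bearer header. The function names and the example workspace URL are my own placeholders for illustration, not something from the repos linked later, and a real pipeline would want error handling and secret management on top of this.

```python
import json
import urllib.request
from typing import List


def build_list_clusters_request(workspace_url: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a GET request for the Clusters API list endpoint.

    workspace_url and token are supplied by the caller; nothing is hard-coded.
    """
    url = workspace_url.rstrip("/") + "/api/2.0/clusters/list"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


def extract_cluster_names(payload: dict) -> List[str]:
    """Pull cluster names out of a clusters/list JSON response.

    The response may have no "clusters" key when the workspace has none.
    """
    return [c.get("cluster_name", "") for c in payload.get("clusters", [])]


def list_cluster_names(workspace_url: str, token: str) -> List[str]:
    """Send the request and return the names of the clusters in the workspace."""
    request = build_list_clusters_request(workspace_url, token)
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    return extract_cluster_names(payload)
```

In a CI/CD job you would typically read the workspace URL and token from pipeline secrets (for example environment variables, which is an assumption here) and call `list_cluster_names(url, token)` as a quick smoke test that the pipeline can reach the workspace.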

Which one to choose?

This is where people look for the Holy Grail but, as mentioned before, it really depends on your team and their skill set. We want everyone to own and orchestrate this, not just the one person who happens to be good at DevOps; relying on a single person breaks the DevOps model and creates bottlenecks. In the blogs that follow, we will deep dive into the tooling mentioned above and more, with real-world examples for you to experiment with and use yourselves.

GitHub Repos

Databricks Rest API (& Python)

https://github.com/AnnaWykes/devops-for-databricks/

Terraform101

https://github.com/AnnaWykes/terraform101

Pulumi & Databricks

Videos

Databricks Rest API (& Python)

https://www.youtube.com/watch?v=P8Yki0BCP44&t=2s

Terraform101

Next blog in the series: DevOps for Databricks: Databricks Rest API & Python

Author

Anna Wykes